Ever since GPT-5 dropped, the AI world hasn’t stopped talking about the sheer range of things it can do: coding, writing assistance, image generation, even acting as an autonomous agent. It’s like having everything a chatbot can do in one place. But is GPT-5 actually good? Does it really outperform previous OpenAI models? Since the launch, I’ve been experimenting with GPT-5 using diverse prompts. I’ve listed some of them below so you can try them too and see how the model actually performs.

Before we jump into the prompts, check out this detailed article on what GPT-5 is and how it is different from previous OpenAI models.

Let’s go through the tasks one by one:

Task 1: Create a Social Media Tracker

Purpose: A shared tool to track daily posting progress across platforms, celebrate completions, and maintain consistency.

Users & Roles:

- Nitika (Social Media Manager) – Oversees all platforms

- Harshit (LinkedIn Manager) – Posts: 4/day

- Riya (Instagram Manager) – Posts: 4/day

Key Features:

✅ Daily Goal Tracking: Visual counter for planned vs. completed posts (4/day/platform).

✅ Confetti Celebration: Instant animated confetti when a post is logged as “done.”

✅ Simple Interface: Color-coded by platform (e.g., LinkedIn = blue, Instagram = purple).

✅ Collaboration: Notes section for each post to share links or comments.

Example Workflow:

- Harshit logs a LinkedIn post → counter updates → CONFETTI!

- Dashboard shows: *“3/4 posts done for LinkedIn | 1/4 for Instagram”*.

Bonus: Weekly summary report auto-generated every Friday.

Output:

Observation:

The social media tracker prototype executes all requested features: clear task assignments, accurate post tracking (4/day/platform), and satisfying confetti animations upon completion. The inclusion of both daily progress views and weekly summaries makes it practical for team coordination. With its clean interface and well-documented JSON structure (including platform-specific color codes and motivational prompts), it serves as an excellent developer reference. Minor enhancements like post-type categorization could strengthen V2, but the current version already delivers a solid foundation for production.
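
If you want to check the counting logic yourself, here is a minimal Python sketch of the daily counter the prompt describes. It is not GPT-5's output. The `DailyTracker` class and its method names are my own hypothetical choices, and the confetti and UI parts are left out entirely.

```python
from collections import Counter
from dataclasses import dataclass, field

DAILY_GOAL = 4  # posts per platform per day, as stated in the prompt


@dataclass
class DailyTracker:
    """Counts completed posts per platform for a single day (hypothetical model)."""

    platforms: tuple = ("LinkedIn", "Instagram")
    done: Counter = field(default_factory=Counter)

    def log_post(self, platform: str) -> int:
        """Record one completed post and return the new count for that platform."""
        if platform not in self.platforms:
            raise ValueError(f"unknown platform: {platform}")
        self.done[platform] += 1
        return self.done[platform]

    def summary(self) -> str:
        """Render the dashboard line in the format shown in the example workflow."""
        first, *rest = self.platforms
        parts = [f"{self.done[first]}/{DAILY_GOAL} posts done for {first}"]
        parts += [f"{self.done[p]}/{DAILY_GOAL} for {p}" for p in rest]
        return " | ".join(parts)


tracker = DailyTracker()
for _ in range(3):
    tracker.log_post("LinkedIn")
tracker.log_post("Instagram")
print(tracker.summary())  # 3/4 posts done for LinkedIn | 1/4 for Instagram
```

A UI built on top of this would fire the confetti animation each time `log_post` returns, since the prompt asks for a celebration on every logged post rather than only when the daily goal is reached.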

Task 2: Create a Guess the Word Game

Create a cute and interactive UI for a “Guess the Word” game where the player knows a secret word and provides 3 short clues (max 10 words each). The AI then has 3 attempts to guess the word. If the AI guesses correctly, it wins; otherwise, the player wins.

Output:

Observation:

While the game delivers a fun experience with its cute UI and smooth gameplay, it currently lacks the core feature where the player can input the secret word for the AI to guess. Implementing this would make it fully align with the original prompt. That said, the confetti celebration, clean design, and responsive feedback make it an engaging prototype. With the word-input mechanic added, this could be a perfect 10/10!

Task 3: Exam Preparation

I’m preparing for an exam on Agentic AI and have covered basic/intermediate topics like:

- Definition and core principles of Agentic AI

- Differences between SLMs and LLMs in agentic systems

- Role of reinforcement learning in autonomous agents

- Ethical considerations in agentic AI deployment

- NVIDIA’s research on SLMs for agentic workflows

Create a 10-question MCQ test with:

- 4 options per question (single correct answer)

- Final score report with % correct

- Detailed explanation for any wrong answers, citing sources

Output:

Observation:

Wow! Killer MCQ test for Agentic AI prep! Short but powerful questions nail all key concepts – autonomy, tools, ethics. Instant feedback explains every answer with real examples (like how agentic AI books trips differently than chatbots). Perfectly mimics exams with 60-second timed questions. Paste your syllabus to customize it. 10/10 for making study fun AND effective. Best exam hack ever!
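
To make the scoring requirement from the prompt concrete (a final score with % correct, plus explanations only for wrong answers), here is a rough Python sketch. The `Question` class, the `score_report` function, and the placeholder questions are all hypothetical and only illustrate the logic. This is not how GPT-5 implemented it.

```python
from dataclasses import dataclass


@dataclass
class Question:
    text: str
    options: list      # four options per question, per the prompt
    correct: int       # index of the single correct option
    explanation: str   # shown only when the answer is wrong


def score_report(questions, answers):
    """Return the score, the % correct, and explanations for wrong answers."""
    if not questions or len(questions) != len(answers):
        raise ValueError("need one answer per question")
    review = [
        (number, q.explanation)
        for number, (q, a) in enumerate(zip(questions, answers), start=1)
        if a != q.correct
    ]
    correct = len(questions) - len(review)
    return {
        "correct": correct,
        "total": len(questions),
        "percent": round(100 * correct / len(questions), 1),
        "review": review,
    }


# Placeholder questions, only to exercise the scoring logic.
quiz = [
    Question(f"Placeholder question {i}", ["A", "B", "C", "D"], 0, f"Explanation {i}")
    for i in range(1, 5)
]
report = score_report(quiz, [0, 0, 2, 0])  # third answer is wrong
print(report["percent"], report["review"])  # 75.0 [(3, 'Explanation 3')]
```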

Task 4: Operational Tasks

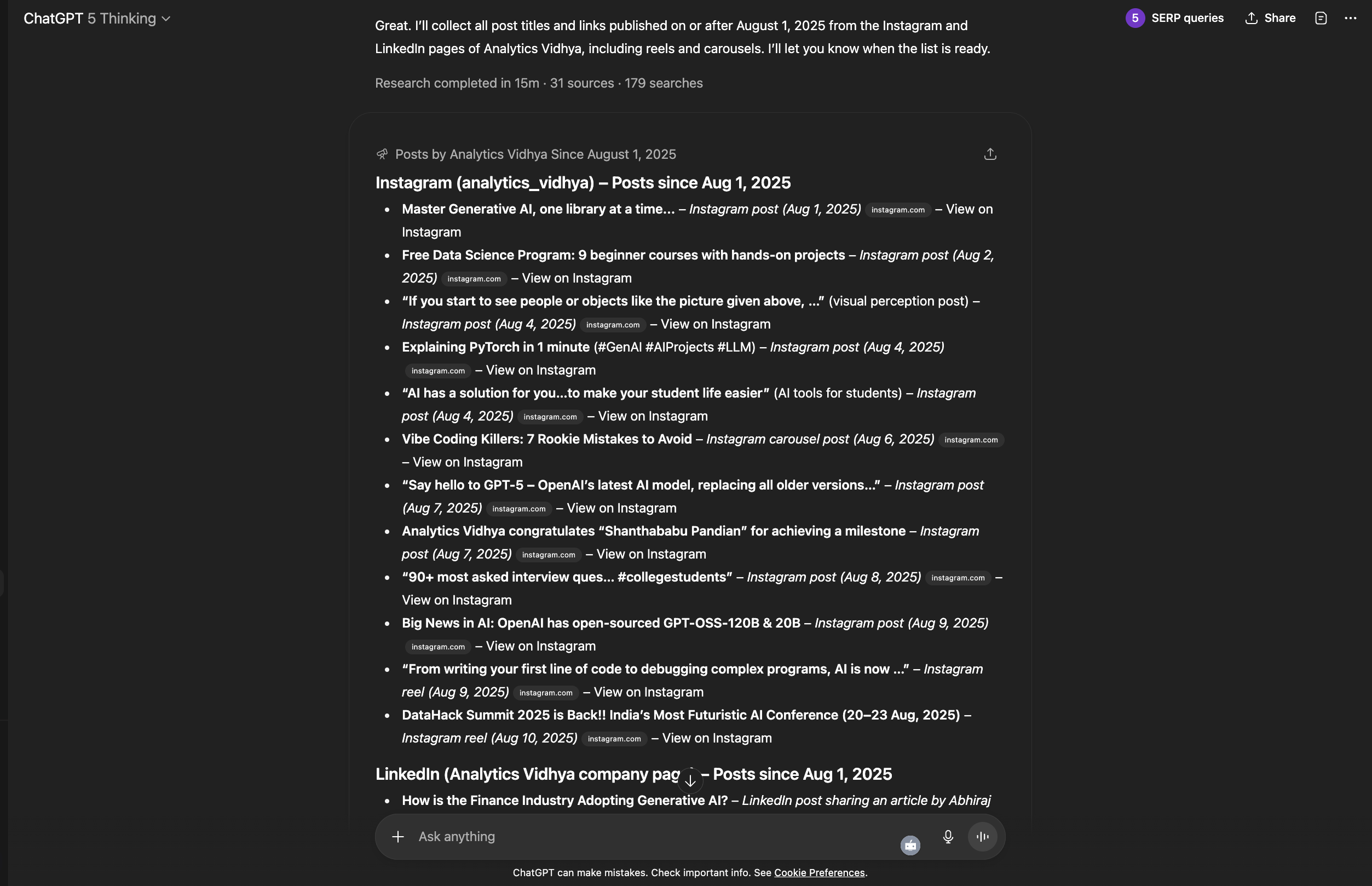

I had to fill in some trackers for the weekly analysis. Instead of doing it manually, I asked GPT-5 to get the information for me.

Give me a list of all the posts and their links posted on these channels on and after 1st of August 2025 – https://www.linkedin.com/company/analytics-vidhya/

Output format is a table – Date | Post_url | platform

Output:

Observation:

I attempted to automate data collection for weekly analysis by asking GPT-5 to retrieve posts from Analytics Vidhya’s Instagram and LinkedIn (posted on or after August 1, 2025). The output was incomplete: both platforms typically publish 4 posts per day (totaling ~25–32 posts per platform for the period), but GPT-5 returned far fewer entries.
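
The completeness check above is simple arithmetic, and a small Python sketch makes it repeatable for any date range. The function names are hypothetical, the end dates are assumptions chosen to match the ~25–32 range, and the returned-post count in the last line is a made-up number for illustration, not the figure GPT-5 actually produced.

```python
from datetime import date


def expected_posts(start: date, end: date, posts_per_day: int = 4) -> int:
    """Expected number of posts on one platform, counting both end dates."""
    days = (end - start).days + 1
    if days < 1:
        raise ValueError("end date must not be before start date")
    return days * posts_per_day


def coverage(returned: int, expected: int) -> float:
    """Fraction of the expected posts that the model actually returned."""
    return returned / expected


# Assumed end dates: 7 or 8 days of posting starting August 1, 2025.
print(expected_posts(date(2025, 8, 1), date(2025, 8, 7)))  # 28
print(expected_posts(date(2025, 8, 1), date(2025, 8, 8)))  # 32

# Hypothetical count of returned rows, only to show the check.
print(coverage(10, 32))  # 0.3125
```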

Since GPT-5 failed to capture the full dataset accurately, I went to Manus AI and got the task done!

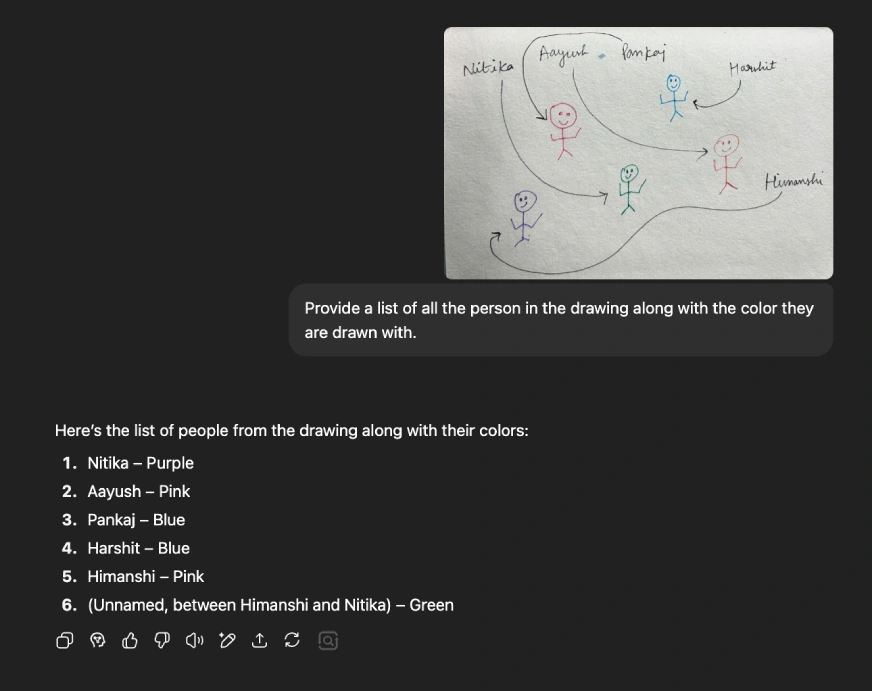

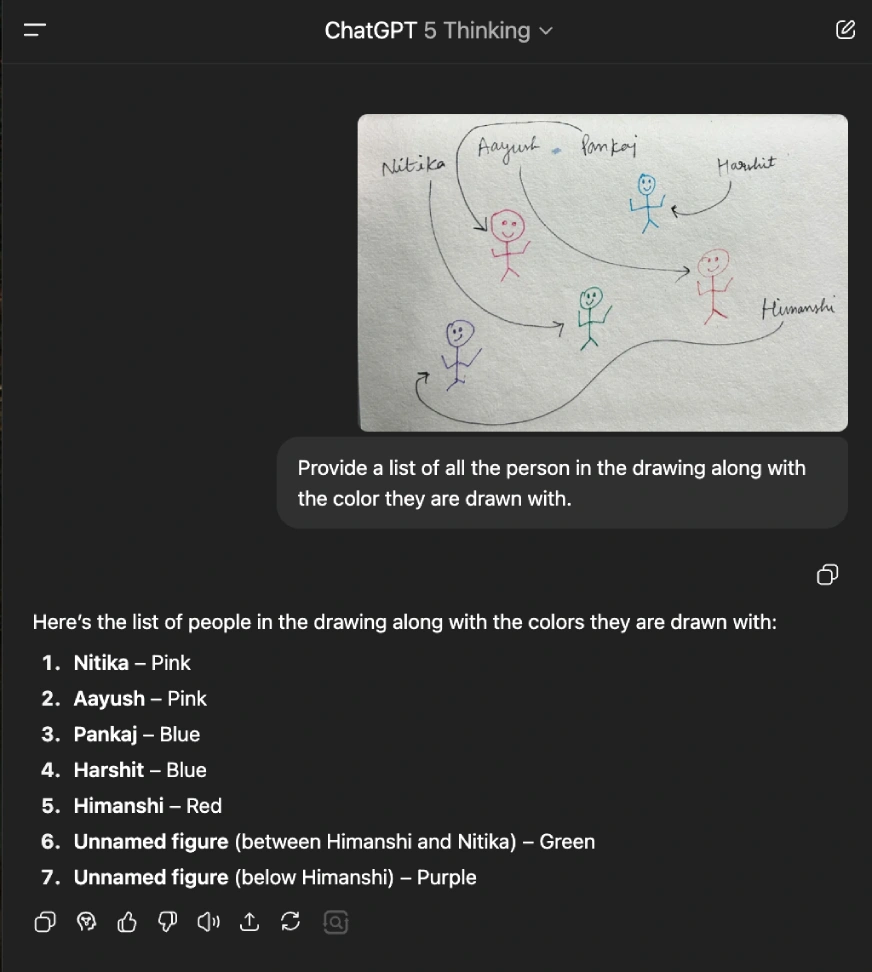

Task 5: Reasoning and Image Analysis

I previously tried this task with OpenAI’s o3 and o4-mini, and both failed at it. To learn more, check out my previous blog, 6 o3 Prompts You Must Try Today. Let’s see if GPT-5 is able to solve this!

Provide a list of all the people in the drawing along with the color they are drawn with.

Output:

Incorrect answer. Also, since this was a reasoning question, GPT-5’s thinking mode should have handled it, but the answer came from the standard GPT-5 model. I selected thinking mode manually to see if it could answer better. Here’s the output:

The response remains incorrect despite using Thinking Mode. Based on this performance, GPT-5’s reasoning capabilities don’t appear to meet OpenAI’s advertised benchmarks for this type of complex query. I expected more accurate results.

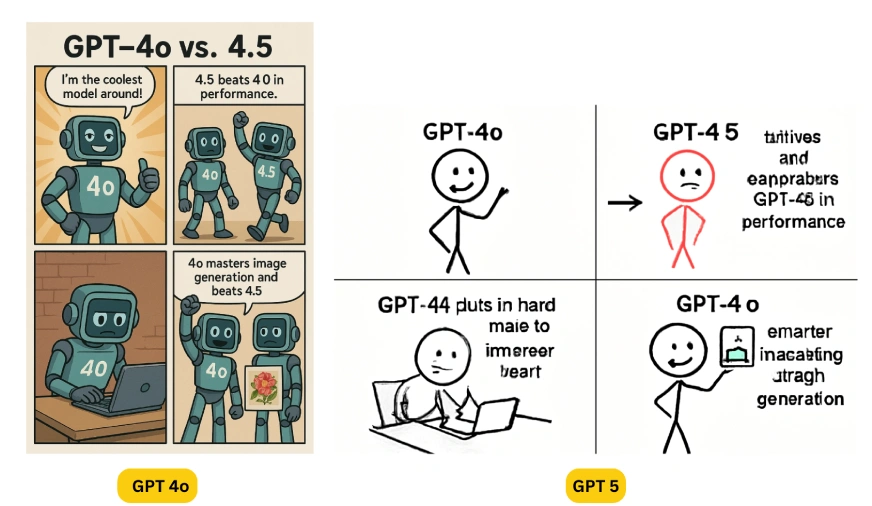

Task 6: Image Generation

Again, I am comparing the image generation abilities of GPT-5 and GPT-4o. I previously tried the following prompt in my earlier article, 4o Image Generation is SUPER COOL.

Create a 4-image story based on the following sequence:

GPT-4o believes it’s the coolest model out there.

GPT-4.5 arrives and surpasses GPT-4o in performance.

GPT-4o puts in hard work to improve itself.

GPT-4o becomes smarter by mastering image generation.

Output:

Observation:

It’s clear that GPT-5’s image generation represents a significant step backward from GPT-4o. The model struggles with:

- Text Rendering – Fails to accurately incorporate or display text within images

- Image Quality – Produces noticeably lower-resolution outputs with more artifacts

- Prompt Adherence – Frequently misunderstands or ignores specific requests

For a supposedly improved model, these regressions in core functionality are unacceptable.

End Note

While GPT-5 performs well on coding tasks, its shortcomings in reasoning, image generation, and general assistance (previously ChatGPT’s strongest selling points) make it a downgrade for most practical uses. The appeal of ChatGPT was its versatility as an AI assistant for everyday tasks, not just coding (where specialized tools already exist).

Personally, I found the overall experience underwhelming: the model failed to deliver the tangible value I’d come to expect from previous versions (like o3’s reasoning or GPT-4o’s image generation). The lack of model transparency (no visible indicator of which version is generating responses) only adds to the uncertainty.

Try out some prompts yourself in GPT-5 and let me know your feedback in the comments section below.
