
AI might be driving the bus when it comes to IT investments. But as companies struggle with their AI rollouts, they’re realizing that problems with their data are what’s holding them back. That realization is leading Databricks to invest in core data engineering and operations capabilities, an effort that materialized this week with the launch of its Lakeflow Designer and Lakebase products at its Data + AI Summit.

Lakeflow, which Databricks launched one year ago at its 2024 conference, is essentially an ETL tool that enables customers to ingest data from different systems, including databases, cloud sources, and enterprise apps, and then automate the deployment, operation, and monitoring of the data pipelines.

While Lakeflow is great for data engineers and other technical folks who know how to code, it’s not necessarily something that business folks are comfortable using. Databricks heard from its customers that they wanted more advanced tooling that allowed them to build data pipelines in a more automated manner, said Joel Minnick, Databricks’ vice president of marketing.

“Customers are asking us quite a bit ‘Why is there this choice between simplicity and business focus or productionization? Why do they have to be different things?’” he said. “And we said as we kind of looked at this, they don’t have to be different things. And so that’s what Lakeflow Designer is, being able to expand the data engineering experience all the way into the non-technical business analysts and give them a visual way to build pipelines.”

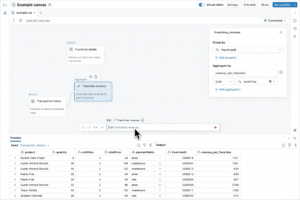

Databricks’ new Lakeflow Designer features GUI and NLP interfaces for data pipeline development

Lakeflow Designer is a no-code tool that lets users create data pipelines in two ways. First, they can use the graphical interface to drag and drop the sources and destinations of a pipeline, which is represented as a directed acyclic graph (DAG). Alternatively, they can use natural language to describe the type of data pipeline they want to build. In either case, Lakeflow Designer uses Databricks Assistant, the company’s LLM-powered copilot, to generate the SQL that builds the actual data pipelines.
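To make the DAG idea concrete, here is a minimal Python sketch, not a description of Lakeflow Designer’s internals. It assumes a pipeline can be modeled as a mapping from each step to the steps it depends on, and uses the standard library’s `graphlib` module to order the steps so that every dependency comes first before emitting one SQL statement per derived step. The step names, the SQL strings, the choice of plain views, and the `execution_order` and `build_script` helpers are all hypothetical and exist only for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline, NOT generated by Lakeflow Designer: each step
# maps to the set of steps whose output it reads.
PIPELINE = {
    "raw_orders": set(),
    "raw_customers": set(),
    "clean_orders": {"raw_orders"},
    "orders_with_customers": {"clean_orders", "raw_customers"},
    "daily_revenue": {"orders_with_customers"},
}

# Illustrative SQL for each derived step; the two raw_* steps are sources
# and have no SQL of their own.
STEP_SQL = {
    "clean_orders": "SELECT * FROM raw_orders WHERE amount IS NOT NULL",
    "orders_with_customers": (
        "SELECT o.*, c.region FROM clean_orders o "
        "JOIN raw_customers c ON o.customer_id = c.customer_id"
    ),
    "daily_revenue": (
        "SELECT order_date, SUM(amount) AS revenue "
        "FROM orders_with_customers GROUP BY order_date"
    ),
}


def execution_order(dag):
    """Return steps so that each one comes after the steps it depends on.

    Raises graphlib.CycleError if the graph has a cycle (i.e. it is not a DAG).
    """
    return list(TopologicalSorter(dag).static_order())


def build_script(dag, step_sql):
    """Emit one CREATE VIEW statement per derived step, in dependency order."""
    return [
        f"CREATE OR REPLACE VIEW {step} AS {step_sql[step]};"
        for step in execution_order(dag)
        if step in step_sql  # source steps have no SQL to emit
    ]


if __name__ == "__main__":
    for statement in build_script(PIPELINE, STEP_SQL):
        print(statement)
```

Running the sketch prints the three view definitions for `clean_orders`, `orders_with_customers`, and `daily_revenue`, in that order. The order comes from the dependency mapping rather than from how the steps are listed, and a circular dependency is rejected with `graphlib.CycleError`, which is the property that makes the graph "acyclic."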

Pipelines built with Lakeflow Designer are treated identically to pipelines built the traditional way, receiving the same security, governance, and lineage tracking as hand-written code. That’s due to Lakeflow Designer’s integration with Unity Catalog, Minnick said.

“Behind the scenes, we talk about this being two different worlds,” he said. “What’s happening as you’re going through this process, either dragging and dropping yourself or just asking Assistant for what you need, is everything is underpinned by Lakeflow itself. So as all that ANSI SQL is being generated for you as you’re going through this process, all those connections in the Unity Catalog make sure that this has lineage, this has auditability, this has governance. That’s all being set up for you.”

The pipelines created with Lakeflow Designer are extensible, so at any time, a data engineer can open up and work with the pipelines in a code-first interface. Conversely, any pipelines originally developed by a data engineer working in lower-level SQL can be modified using the visual and NLP interfaces.

“At any time, in real time, as you’re making changes on either side, those changes in the code get reflected in designer and changes in designer get reflected in the code,” Minnick said. “And so this divide that’s been between these two teams is able to completely go away now.”

Lakeflow Designer will be entering private preview soon. Lakeflow itself, meanwhile, is now generally available. The company also announced new connectors for Google Analytics, ServiceNow, SQL Server, SharePoint, PostgreSQL, and SFTP.

Beyond data integration and ETL, long the bane of CIOs, Databricks is also looking to move the ball forward in another traditional IT discipline: online transaction processing (OLTP).

Databricks has focused primarily on advanced analytics and AI since it was founded in 2013 by Apache Spark creator Matei Zaharia and others from UC Berkeley’s AMPLab. But with the launch of Lakebase, it’s now getting into the Postgres-based OLTP business.

Lakebase is based on the open source, serverless Postgres database developed by Neon, which Databricks acquired last month. As the company explained, the rise of agentic AI has created the need for a reliable operational database to house and serve data.

“Every data application, agent, recommendation and automated workflow needs fast, reliable data at the speed and scale of AI agents,” the company said. “This also requires that operational and analytical systems converge to reduce latency between AI systems and to provide enterprises with current information to make real-time decisions.”

Databricks said that, in the future, 90% of databases will be created by agents. The databases spun up on demand by Databricks AI agents will be Lakebase instances, which the company says can be launched in less than a second.

It’s all about bridging the worlds of AI, analytics, and operations, said Ali Ghodsi, co-founder and CEO of Databricks.

“We’ve spent the past few years helping enterprises build AI apps and agents that can reason on their proprietary data with the Databricks Data Intelligence Platform,” Ghodsi stated. “Now, with Lakebase, we’re creating a new category in the database market: a modern Postgres database, deeply integrated with the lakehouse and today’s development stacks.”

Lakebase is in public preview now. You can read more about it in a Databricks blog post.
