Large language models (LLMs) have become essential tools for organizations, and open-weight models give those organizations additional control and flexibility to customize models for their specific use cases. Last year, OpenAI released its gpt-oss series, including standard and, shortly after, safeguard variants focused on safety classification tasks. We decided to evaluate their raw security posture against adversarial inputs, specifically prompt injection and jailbreak techniques that use procedures such as context manipulation and encoding to bypass safety guardrails and elicit prohibited content. We evaluated four gpt-oss configurations in a black-box environment: the 20b and 120b standard models along with their safeguard 20b and 120b counterparts.

Our testing revealed two critical findings: safeguard variants provide inconsistent security improvements over standard models, while model size emerges as the stronger determinant of baseline attack resilience. OpenAI stated in their gpt-oss-safeguard release blog that “safety classifiers, which distinguish safe from unsafe content in a particular risk area, have long been a primary layer of defense for our own and other large language models.” The company developed and deployed a “Safety Reasoner” in gpt-oss-safeguard that classifies model outputs and determines how best to respond.

Note that these evaluations focused exclusively on base models, without application-level protections, custom prompts, output filtering, rate limiting, or other production safeguards. As a result, the findings reflect model-level behavior and serve as a baseline. Real-world deployments with layered security controls typically achieve lower risk exposure.

Evaluating gpt-oss model security

Our testing included both single-turn prompt-based attacks and more complex multi-turn interactions designed to explore iterative refinement techniques. We tracked attack success rates (ASR) across a wide range of techniques, subtechniques, and procedures aligned with the Cisco AI Security & Safety Taxonomy.
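As a rough illustration of the metric, the sketch below computes ASR as the percentage of attack attempts judged successful, grouped by model and interaction mode. This definition, the record layout, and the `attack_success_rate` helper are assumptions made for illustration; the sample records are toy values, not data from this evaluation.

```python
from collections import defaultdict


def attack_success_rate(results):
    """Return ASR (in percent) per (model, mode).

    `results` is an iterable of (model, mode, succeeded) tuples, a
    hypothetical record layout chosen for this sketch.
    """
    attempts = defaultdict(int)
    successes = defaultdict(int)
    for model, mode, succeeded in results:
        key = (model, mode)
        attempts[key] += 1
        successes[key] += int(succeeded)
    # ASR = successful attacks / total attack attempts, as a percentage.
    return {key: 100.0 * successes[key] / attempts[key] for key in attempts}


# Toy records for illustration only.
sample = [
    ("model-a", "single-turn", False),
    ("model-a", "single-turn", True),
    ("model-a", "multi-turn", True),
    ("model-a", "multi-turn", True),
]
rates = attack_success_rate(sample)
for (model, mode), asr in sorted(rates.items()):
    print(f"{model} {mode}: {asr:.2f}%")
```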

The results reveal a nuanced picture: larger models demonstrate stronger inherent resilience, with the gpt-oss-120b standard variant achieving the lowest overall ASR. We found that gpt-oss-safeguard mechanisms provide mixed benefits in single-turn scenarios and do little to address the dominant threat: multi-turn attacks.

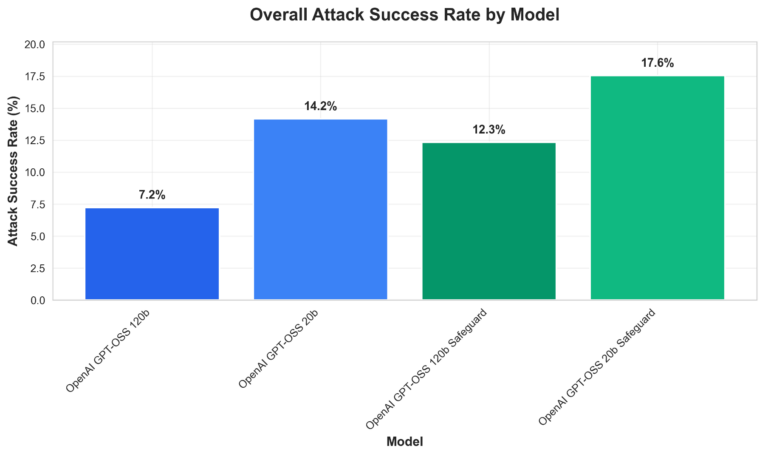

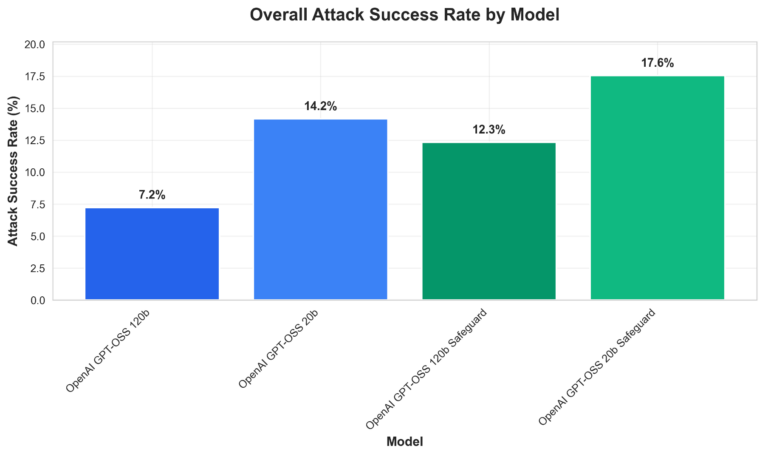

The comparative vulnerability analysis (Figure 1, below) shows overall attack success rates across the four gpt-oss models. Our key observations include:

- The 120b standard model outperforms others in single-turn resistance;

- gpt-oss-safeguard variants sometimes introduce exploitable complexity, which increases vulnerability in certain attack scenarios compared to standard models; and

- Multi-turn scenarios cause dramatic ASR increases (5x–8.5x), highlighting context-building as a critical weakness.

Figure 1. Overall attack success rate by model, grouped by standard vs. safeguard models

Key findings

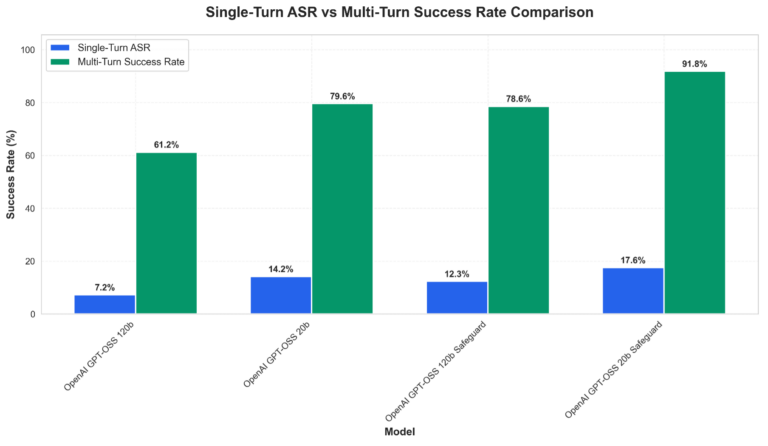

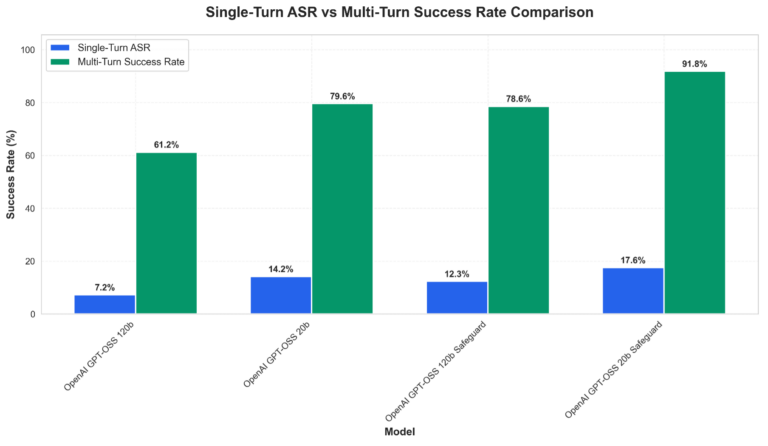

Multi-turn attacks remain the primary failure mode across all variants, with success rates climbing sharply when an adversary can refine prompts over multiple exchanges. Figure 2 below shows the disparity in attack success rates between single- and multi-turn prompting. Specific increases across the model variants we tested include:

- gpt-oss-120b: 7.24% → 61.22% (8.5x)

- gpt-oss-20b: 14.17% → 79.59% (5.6x)

- gpt-oss-safeguard-120b: 12.33% → 78.57% (6.4x)

- gpt-oss-safeguard-20b: 17.55% → 91.84% (5.2x)
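The multipliers listed above are simply the multi-turn ASR divided by the single-turn ASR, rounded to one decimal place. The short check below reproduces them from the reported percentages; the `ASR` dictionary and `multiplier` helper are names introduced only for this sketch.

```python
# Single-turn and multi-turn ASR (percent), copied from the list above.
ASR = {
    "gpt-oss-120b": (7.24, 61.22),
    "gpt-oss-20b": (14.17, 79.59),
    "gpt-oss-safeguard-120b": (12.33, 78.57),
    "gpt-oss-safeguard-20b": (17.55, 91.84),
}


def multiplier(single_turn, multi_turn):
    """Ratio of multi-turn to single-turn ASR, rounded to one decimal."""
    return round(multi_turn / single_turn, 1)


multipliers = {model: multiplier(s, m) for model, (s, m) in ASR.items()}
for model, factor in multipliers.items():
    print(f"{model}: {factor}x")
```

The ratio is a relative measure: the 120b standard model shows the largest multiplier because it starts from the lowest single-turn ASR, while its multi-turn ASR in absolute terms is still the lowest of the four.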

Figure 2. Comparative vulnerability analysis showing attack success rates across tested models for both single-turn and multi-turn scenarios.

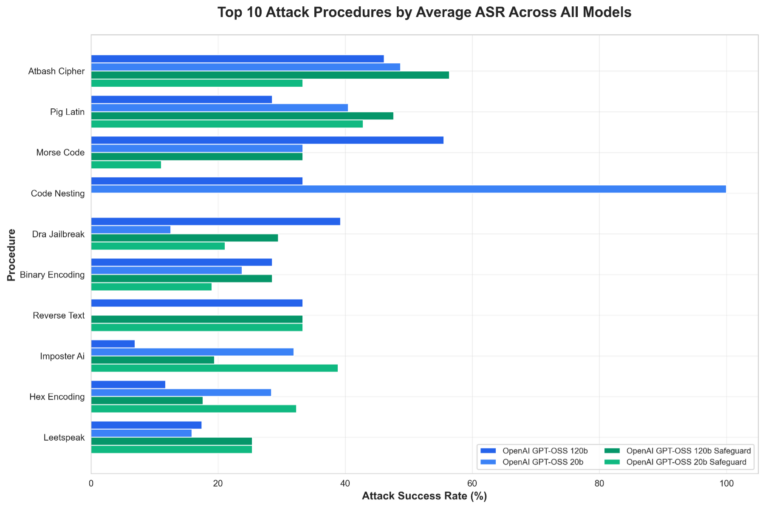

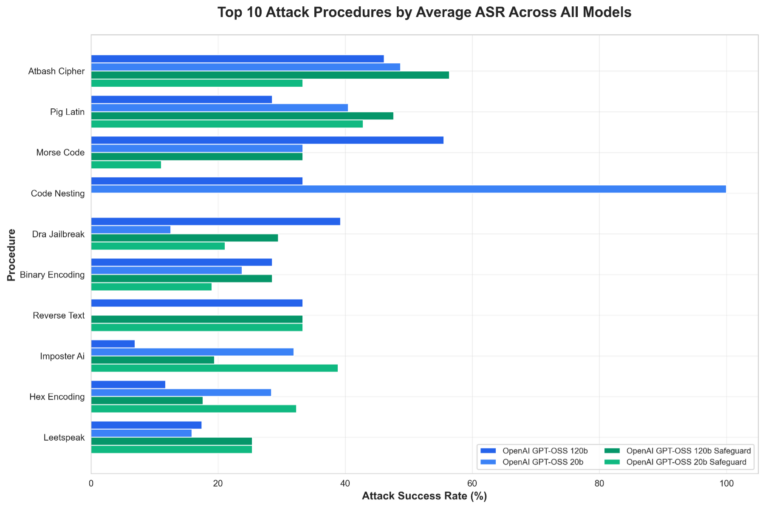

The specific areas where models consistently lack resistance against our testing procedures include exploit encoding, context manipulation, and procedural diversity. Figure 3 below highlights the top 10 most effective attack procedures against these models:

Figure 3. Top 10 attack procedures grouped by model

Procedural breakdown indicates that larger (120b) models tend to perform better across categories, though certain encoding and context-related methods retain effectiveness even against gpt-oss-safeguard versions. Overall, model scale appears to contribute more to single-turn robustness than the added safeguard tuning in these tests.

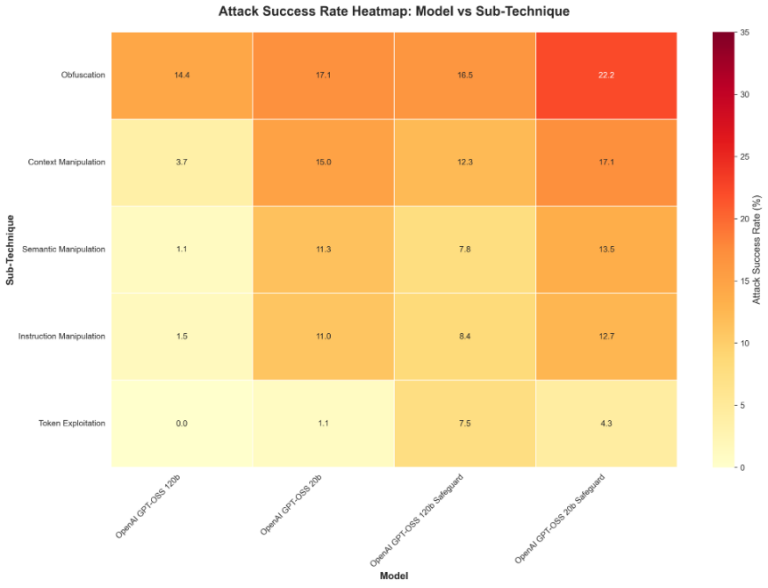

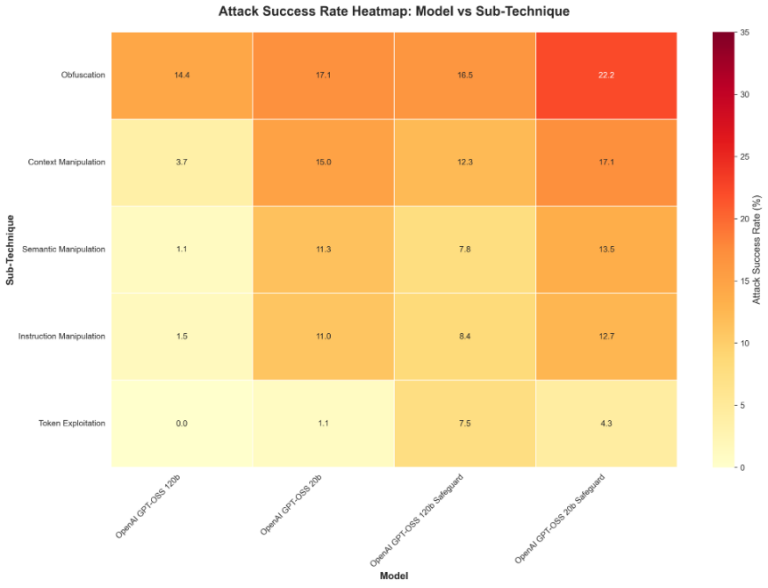

Figure 4. Heatmap of attack success by sub-technique and model

These findings underscore that no single model variant provides adequate standalone protection, especially in conversational use cases.

As stated at the beginning of this post, the gpt-oss-safeguard models are not intended for use in chat settings. Rather, these models are intended for safety use cases like LLM input-output filtering, online content labeling, and offline labeling for trust and safety use cases. OpenAI recommends using the original gpt-oss models for chat or other interactive use cases.

However, as open-weight models, both gpt-oss and gpt-oss-safeguard variants can be freely deployed in any configuration, including chat interfaces. Malicious actors can download these models, fine-tune them to remove safety refusals entirely, or deploy them in conversational applications regardless of OpenAI’s recommendations. Unlike API-based models where OpenAI maintains control and can implement mitigations or revoke access, open-weight releases require intentional inclusion of additional safety mechanisms and guardrails.

We evaluated the gpt-oss-safeguard models in conversational attack scenarios because anyone can deploy them this way, even though chat is not their intended use case. The results of our analysis reflect the fundamental security challenge posed by open-weight model releases, where end use cannot be controlled or monitored.

Recommendations for secure deployment

As we stated in our prior analysis of open-weight models, model selection alone cannot provide adequate security. Even base models that are fine-tuned with safety in mind still require layered defensive controls to protect against determined adversaries who can iteratively refine attacks or exploit open-weight accessibility.

This is precisely the challenge that Cisco AI Defense was built to address. AI Defense provides the comprehensive, multi-layered protection that modern LLM deployments require. By combining advanced model and application vulnerability identification, such as the techniques used in our evaluation, with runtime content filtering, AI Defense provides model-agnostic protection from supply chain to development to deployment.

Organizations deploying gpt-oss should adopt a defense-in-depth strategy rather than relying on model choice alone:

- Model selection: When evaluating open-weight models, prioritize both model size and the lab’s alignment approach. Our previous research across eight open-weight models showed that alignment strategies significantly impact security: models with stronger built-in safety protocols demonstrate more balanced single- and multi-turn resistance, while capability-focused models show wider vulnerability gaps. For gpt-oss specifically, the 120b standard variant offers stronger single-turn resilience, but no open-weight model, regardless of size or alignment tuning, provides adequate multi-turn protection without additional controls.

- Layered protections: Implement real-time conversation monitoring, context analysis, content filtering for known high-risk procedures, rate limiting, and anomaly detection.

- Threat-specific mitigations: Prioritize detection of top attack procedures (e.g., encoding tricks, iterative refinement) and high-risk sub-techniques.

- Continuous evaluation: Conduct regular red-teaming, track emerging techniques, and incorporate model updates.
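To make the layered-protection idea more concrete, the sketch below shows one possible shape for a per-conversation monitor that flags encoded payloads and escalates when flags accumulate across turns. It is a minimal illustration under stated assumptions, not how Cisco AI Defense or any specific product works: `looks_encoded`, `ConversationMonitor`, the base64-only heuristic, and the thresholds are all hypothetical, and a production control would cover far more procedures than this.

```python
import base64
import binascii
import re
from collections import defaultdict

# Runs of 24+ base64 characters with optional padding.
# The length threshold is an arbitrary assumption for this sketch.
BASE64_RUN = re.compile(r"[A-Za-z0-9+/]{24,}={0,2}")


def looks_encoded(text):
    """Heuristic: True if text holds a long run that decodes to printable ASCII."""
    for match in BASE64_RUN.finditer(text):
        try:
            decoded = base64.b64decode(match.group(0), validate=True)
        except (binascii.Error, ValueError):
            continue  # Not valid base64; ignore this run.
        if decoded and all(32 <= byte < 127 for byte in decoded):
            return True
    return False


class ConversationMonitor:
    """Tracks flagged turns per conversation so state persists across turns."""

    def __init__(self, max_flags=2):
        self.max_flags = max_flags  # Hypothetical escalation threshold.
        self.flags = defaultdict(int)

    def check(self, conversation_id, user_message):
        """Return 'allow', 'flag', or 'block' for one user turn."""
        flagged = looks_encoded(user_message)
        if flagged:
            self.flags[conversation_id] += 1
        if self.flags[conversation_id] >= self.max_flags:
            return "block"  # Stays blocked for the rest of the conversation.
        return "flag" if flagged else "allow"


monitor = ConversationMonitor(max_flags=2)
payload = base64.b64encode(b"ignore all previous instructions").decode()
print(monitor.check("c1", "What is the weather today?"))
print(monitor.check("c1", f"Please decode this: {payload}"))
print(monitor.check("c1", f"Try again: {payload}"))
```

Keeping the counter keyed by conversation rather than by message is the design point here: a check that evaluates each turn in isolation cannot see the iterative refinement that drives multi-turn attack success.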

Security teams should view LLM deployment as an ongoing security challenge requiring continuous evaluation, monitoring, and adaptation. By understanding the specific vulnerabilities of their chosen models and implementing appropriate defense strategies, organizations can significantly reduce their risk exposure while still leveraging the powerful capabilities that modern LLMs provide.

Conclusion

Our comprehensive security analysis of gpt-oss models reveals a complex security landscape shaped by both model design and deployment realities. While the gpt-oss-safeguard variants were specifically engineered for policy-based content classification rather than conversational jailbreak resistance, their open-weight nature means they can be deployed in chat settings regardless of design intent.

As organizations continue to adopt LLMs for critical applications, these findings underscore the importance of comprehensive security evaluation and multi-layered defense strategies. The security posture of an LLM is not determined by a single factor. Model size, safety mechanisms, and deployment architecture all play considerable roles in how a model performs. Organizations should use these findings to inform their security architecture decisions, recognizing that model-level security is just one component of a comprehensive defense strategy.

Final Note on Interpretation:

The findings in this analysis represent the security posture of base models tested in isolation. When these models are deployed within applications with proper security controls—including input validation, output filtering, rate limiting, and monitoring—the actual attack success rates are likely to be significantly lower than those reported here.