(Shutterstock AI)

The past few years have seen AI expand faster than any technology in modern memory. Training runs that once operated quietly inside university labs now span massive facilities packed with high-performance computers, tapping into a web of GPUs and vast volumes of data.

AI essentially runs on three ingredients: chips, data and electricity. Of the three, electricity has been the hardest to scale. Each new generation of models is more powerful, and each new generation of chips is often claimed to be more power-efficient, but the total energy required keeps rising.

Larger datasets, longer training runs and more parameters push total power use far beyond what earlier systems required. What was once largely an algorithmic challenge has run into an engineering roadblock. The next phase of AI progress will rise or fall on who can secure the power, not the compute.

In this part of our Powering Data in the Age of AI series, we’ll look at how energy has become the defining constraint on computational progress — from the megawatts required to feed training clusters to the nuclear projects and grid innovations that could support them.

Understanding the Scale of the Energy Problem

The International Energy Agency (IEA) estimates that data centers worldwide consumed around 415 terawatt hours (TWh) of electricity in 2024, a figure that has grown by about 12% per year over the last five years. As the demands of AI workloads continue to rise, that number is projected to more than double, to around 945 TWh, by 2030.
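Taken together, the two IEA figures imply growth faster than the recent 12% pace. The sketch below treats 415 TWh and 945 TWh as exact endpoints and assumes smooth compound growth between them, a simplification for illustration rather than the IEA's own method.

```python
# Back-of-envelope check on the IEA figures: 415 TWh (2024) -> ~945 TWh (2030).
# Assumes smooth compound growth between the two endpoints (illustrative only).

def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1

start_twh, end_twh = 415.0, 945.0  # IEA estimate (2024) and projection (2030)
years = 2030 - 2024

growth = implied_cagr(start_twh, end_twh, years)
print(f"Growth multiple: {end_twh / start_twh:.2f}x")
print(f"Implied annual growth: {growth:.1%}")

# For comparison: where 2030 would land if growth stayed at the historical ~12%/yr.
at_historical_rate = start_twh * 1.12 ** years
print(f"2030 at 12%/yr: {at_historical_rate:.0f} TWh")
```

Run as written, it reports an implied rate of about 14.7% a year; at the historical 12%, consumption would reach only about 819 TWh by 2030.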

Fatih Birol, the executive director of the IEA, called AI “one of the biggest stories in energy today” and said that electricity demand from data centers could soon rival that of entire countries.

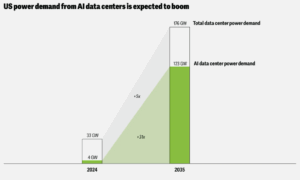

Power Demand from US AI Data Centers Expected to Boom (Credits: deloitte.com)

“Demand for electricity around the world from data centres is on course to double over the next five years, as information technology becomes more pervasive in our lives,” Birol said in a statement released with the IEA’s 2025 Energy and AI report.

“The impact will be especially strong in some countries — in the United States, data centres are projected to account for nearly half of the growth in electricity demand; in Japan, over half; and in Malaysia, one-fifth.”

That shift is already transforming how and where power is delivered. Tech giants are no longer siting data centers only for proximity or network speed; they are also chasing stable grids, low-cost electricity and space for renewable generation.

According to research from Lawrence Berkeley National Laboratory, data centers consumed roughly 176 terawatt hours of electricity in the US alone in 2023, or about 4.4% of total national demand. The buildout is not slowing down. By the end of the decade, new projects could push consumption to almost 800 TWh, as more than 80 gigawatts of additional capacity is projected to come online, provided those projects are completed on time.
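Converting between capacity (gigawatts) and annual consumption (terawatt hours) is simple arithmetic, and it also lets the LBNL percentage be checked against national demand. A minimal sketch; the utilization values are hypothetical, since real facilities rarely draw their full rated power all year.

```python
HOURS_PER_YEAR = 8760

def gw_to_twh_per_year(capacity_gw: float, utilization: float) -> float:
    """Annual energy (TWh) drawn by `capacity_gw` of load running at `utilization` (0-1)."""
    return capacity_gw * HOURS_PER_YEAR * utilization / 1000  # GWh -> TWh

# LBNL: ~176 TWh in 2023, about 4.4% of US demand -> implied national total.
implied_us_total_twh = 176 / 0.044
print(f"Implied total US demand: {implied_us_total_twh:,.0f} TWh")

# Hypothetical average utilization levels for ~80 GW of new capacity.
for utilization in (0.5, 0.75, 1.0):
    added = gw_to_twh_per_year(80, utilization)
    print(f"80 GW at {utilization:.0%} utilization: {added:,.0f} TWh/yr")
```

The useful rule of thumb: each gigawatt drawn around the clock adds about 8.8 TWh a year, and 176 TWh at 4.4% implies total US demand of roughly 4,000 TWh.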

Deloitte projects that power demand from US AI data centers will climb from about 4 gigawatts in 2024 to roughly 123 gigawatts by 2035. Given these projections, it is no surprise that power, rather than fiber routes or tax incentives, now dictates where the next cluster will be built. In some areas, energy planners and tech companies are even negotiating directly to secure long-term supply. What was once a question of compute and scale has become a question of energy.
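Deloitte's projection is steeper still. Assuming constant compound growth between the 2024 and 2035 endpoints (an illustrative simplification, not Deloitte's model), the numbers work out as follows:

```python
import math

# Deloitte projection: ~4 GW (2024) -> ~123 GW (2035) for US AI data centers.
start_gw, end_gw = 4.0, 123.0
years = 2035 - 2024

multiple = end_gw / start_gw
cagr = multiple ** (1 / years) - 1
# Years needed to double at that constant compound rate.
doubling_time = math.log(2) / math.log(1 + cagr)

print(f"Growth multiple: {multiple:.1f}x")
print(f"Implied annual growth: {cagr:.1%}")
print(f"Doubling time: {doubling_time:.1f} years")
```

That is roughly a 31-fold increase, or about 36.5% a year, which means demand would double a little more than every two years.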

Why AI Systems Consume So Much Power

AI's appetite for energy stems partly from the fact that every layer of its infrastructure runs on electricity. At the core of every AI system is raw computation. The chips that train and run large models are by far the biggest energy draw, performing trillions of mathematical operations every second. Google has estimated that the median Gemini Apps text prompt uses 0.24 watt-hours of electricity. Multiply that across the millions of prompts submitted every day, and the totals add up quickly.
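To see how a per-prompt figure scales, the sketch below multiplies Google's 0.24 Wh median by a range of daily prompt volumes. The volumes are hypothetical, and because 0.24 Wh is a median for one product's text prompts, the result is only a rough order-of-magnitude estimate that excludes training and other workloads.

```python
WH_PER_PROMPT = 0.24  # Google's published median for a Gemini Apps text prompt

def daily_energy_kwh(prompts_per_day: float, wh_per_prompt: float = WH_PER_PROMPT) -> float:
    """Total energy (kWh) for a day's prompts at a fixed per-prompt cost."""
    return prompts_per_day * wh_per_prompt / 1000  # Wh -> kWh

def average_power_kw(kwh_per_day: float) -> float:
    """Average continuous power (kW) needed to supply `kwh_per_day` over 24 hours."""
    return kwh_per_day / 24

# Hypothetical daily prompt volumes, for illustration only.
for prompts in (1e6, 1e8, 1e9):
    kwh = daily_energy_kwh(prompts)
    print(f"{prompts:>13,.0f} prompts/day -> {kwh:>9,.0f} kWh/day "
          f"(~{average_power_kw(kwh):,.0f} kW continuous)")
```

At a hypothetical billion prompts a day, the inference load alone would average about 10 MW of continuous draw.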

(3d_man/Shutterstock)

The GPUs that train and run these models consume tremendous power, and nearly all of it ends up as heat, as do the losses in power conversion. That heat has to be removed continuously by cooling systems that consume energy of their own.

Keeping hardware within safe operating temperatures means running cooling systems, pumps and air handlers around the clock. A single rack of modern accelerators can consume 30 to 50 kilowatts, several times what older servers needed. Beyond compute and cooling, high-speed interconnects, storage arrays and voltage conversion all add to the burden.
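A common way to express this overhead is Power Usage Effectiveness (PUE), the ratio of total facility power to the power delivered to IT equipment. The sketch applies it to the 30 to 50 kW rack range above; the PUE values of 1.2 and 1.5 are hypothetical examples, not figures from this article.

```python
def facility_power_kw(it_load_kw: float, pue: float) -> float:
    """Total facility power for a given IT load under a PUE ratio.

    PUE = total facility power / IT equipment power, so the overhead
    (cooling, power conversion and so on) is it_load_kw * (pue - 1).
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * pue

# Rack range from the text: 30-50 kW. PUE values are hypothetical examples.
for rack_kw in (30, 50):
    for pue in (1.2, 1.5):
        total = facility_power_kw(rack_kw, pue)
        print(f"{rack_kw} kW rack, PUE {pue}: {total:.0f} kW total, "
              f"{total - rack_kw:.0f} kW overhead")
```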

Unlike older mainframe workloads that spiked and dropped with changing demand, modern AI systems operate close to full capacity for days or even weeks at a time. This constant intensity places sustained pressure on power delivery and cooling systems, turning energy efficiency from a simple cost consideration into the foundation of scalable computation.
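The difference between bursty and sustained operation shows up directly in energy totals. The sketch assumes a hypothetical 5 MW cluster (100 racks at 50 kW) and, as a simplification, treats energy as proportional to average utilization of peak power; real hardware draws some power even when idle.

```python
def run_energy_mwh(power_kw: float, days: float, avg_utilization: float) -> float:
    """Energy (MWh) used by `power_kw` of peak load over `days` at an average utilization (0-1)."""
    return power_kw * avg_utilization * days * 24 / 1000  # kWh -> MWh

# Hypothetical cluster: 100 racks drawing up to 50 kW each.
CLUSTER_KW = 100 * 50

# Hypothetical profiles: a bursty workload averaging 30% of peak vs. a
# training job held near full capacity (90%) for the whole run.
for label, utilization in (("bursty", 0.3), ("sustained training", 0.9)):
    for days in (7, 30):
        energy = run_energy_mwh(CLUSTER_KW, days, utilization)
        print(f"{label:>18}, {days:>2} days: {energy:>6,.0f} MWh")
```

Over 30 days, the sustained profile uses three times the energy of the bursty one (3,240 vs 1,080 MWh), and the power and cooling plant must carry that load continuously rather than in short peaks.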

Power Problem Growing Faster Than the Chips

Every leap in chip performance now brings an equal and opposite strain on the systems that power it. Each new generation from NVIDIA or AMD raises expectations for speed and efficiency, yet the real story is unfolding outside the chip — in the data centers trying to feed them. Racks that once drew 15 or 20 kilowatts now pull 80 or more, sometimes reaching 120. Power distribution units, transformers, and cooling loops all have to evolve just to keep up.

(Jack_the_sparow/Shutterstock)

What was once a question of processor design has become an engineering puzzle of scale. The Semiconductor Industry Association’s 2025 State of the Industry report describes this as a “performance-per-watt paradox,” where efficiency gains at the chip level are being outpaced by total energy growth across systems. Each improvement invites larger models, longer training runs, and heavier data movement — erasing the very savings those chips were meant to deliver.
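The paradox reduces to one ratio: total energy equals the work performed divided by the work done per unit of energy. A minimal sketch, with a hypothetical 1.4x efficiency gain and hypothetical growth in total compute:

```python
def energy_ratio(compute_growth: float, efficiency_gain: float) -> float:
    """Change in total energy when work grows `compute_growth`x while
    performance per watt improves `efficiency_gain`x.

    Energy = work / (work per unit energy), so the ratio is compute_growth / efficiency_gain.
    """
    return compute_growth / efficiency_gain

# Hypothetical scenario: a chip generation 1.4x more efficient per watt,
# paired with workloads whose total compute grows 1x, 2x or 4x.
for growth in (1.0, 2.0, 4.0):
    print(f"compute x{growth:.0f}, efficiency x1.4 -> energy x{energy_ratio(growth, 1.4):.2f}")
```

Energy falls only if the workload grows by less than the efficiency gain; once compute doubles, the 1.4x improvement is already outrun.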

To handle this new demand, operators are shifting from air to liquid cooling, upgrading substations, and negotiating directly with utilities for multi-megawatt connections. The infrastructure built for yesterday’s servers is being re-imagined around power delivery, not compute density. As chips grow more capable, the physical world around them — the wires, pumps, and grids — is struggling to catch up.

The New Metric That Rules the AI Era: Speed-to-Power

Inside the largest data centers on the planet, a quiet shift is taking place. The old race for pure speed has given way to something more fundamental — how much performance can be extracted per unit of power. This balance, sometimes called the speed-to-power tradeoff, has become the defining equation of modern AI.

It’s not a benchmark like FLOPS, but it now influences nearly every design decision. Chipmakers promote performance per watt as their most important competitive edge, because speed doesn’t matter if the grid can’t handle it. NVIDIA’s H200 GPU, for instance, delivers about 1.4 times the performance-per-watt of the H100, while AMD’s MI300 family focuses heavily on efficiency for large-scale training clusters. Yet as chips grow more capable, total energy demand keeps climbing.
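When the grid connection is the binding limit, delivered throughput is simply the power budget multiplied by performance per watt, so efficiency gains translate directly into capacity. A sketch with a hypothetical 50 MW budget and arbitrary work units; the 1.4x factor mirrors the H200 figure cited above.

```python
def throughput_under_cap(power_budget_mw: float, perf_per_watt: float) -> float:
    """Aggregate throughput a site can deliver when power, not chip count, is the limit.

    `perf_per_watt` is in arbitrary work units per second per watt (hypothetical).
    """
    return power_budget_mw * 1e6 * perf_per_watt  # MW -> W

BUDGET_MW = 50  # hypothetical grid connection
baseline = throughput_under_cap(BUDGET_MW, perf_per_watt=1.0)
improved = throughput_under_cap(BUDGET_MW, perf_per_watt=1.4)  # ~1.4x perf/W
print(f"Throughput gain at a fixed {BUDGET_MW} MW cap: {improved / baseline:.2f}x")
```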

That dynamic is also reshaping the economics of AI. Cloud providers are starting to charge for workloads based not just on runtime but on the power they draw, forcing developers to optimize for energy throughput rather than latency. Data center architects now design around megawatt budgets instead of square footage, while governments from the U.S. to Japan are issuing new rules for energy-efficient AI systems.
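Designing around a megawatt budget changes the question from how many racks fit on the floor to how many racks the power supply can feed. The sketch uses a hypothetical 10 MW budget and the rack densities mentioned earlier, and ignores cooling and conversion overhead for simplicity.

```python
def racks_supported(budget_mw: float, rack_kw: float) -> int:
    """Whole racks a facility can power when their combined IT load must fit the budget."""
    return int(budget_mw * 1000 // rack_kw)  # MW -> kW, then whole racks only

BUDGET_MW = 10  # hypothetical facility power budget
for rack_kw in (15, 20, 80, 120):  # rack densities from the text
    print(f"{rack_kw:>3} kW racks: {racks_supported(BUDGET_MW, rack_kw):>3} racks in {BUDGET_MW} MW")
```

The same 10 MW that once fed 500 racks at 20 kW supports only 83 at 120 kW.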


It may never appear on a spec sheet, but speed-to-power quietly defines who can build at scale. When one model can consume as much electricity as a small city, efficiency matters — and it’s showing in how the entire ecosystem is reorganizing around it.

The Race for AI Supremacy

As energy becomes the new epicenter of computational advantage, governments and companies that can produce reliable power at scale will pull ahead not only in AI but across the broader digital economy. Analysts describe this as the rise of a “strategic electricity advantage.” The concept is both straightforward and far-reaching: as AI workloads surge, the countries able to deliver abundant, low-cost energy will lead the next wave of industrial and technological growth.

(BESTWEB/Shutterstock)

Without faster investment in nuclear power and grid expansion, the US could face reliability risks by the early 2030s. That’s why the conversation is shifting from cloud regions to power regions.

Several governments are already investing in nuclear computation hubs — zones that combine small modular reactors with hyperscale data centers. Others are using federal lands for hybrid projects that pair nuclear with gas and renewables to meet AI’s rising demand for electricity. This is only the beginning of the story. The real question is not whether we can power AI, but whether our world can keep up with the machines it has created.

In the next parts of our Powering Data in the Age of AI series, we’ll explore how companies are turning to new sources of energy to sustain their AI ambitions, how the power grid itself is being reinvented to think and adapt like the systems it fuels, and how data centers are evolving into the laboratories of modern science. We’ll also look outward at the race unfolding between the US, China, and other countries to gain control over the electricity and infrastructure that will drive the next era of intelligence.
