(Thapana_Studio/Shutterstock)

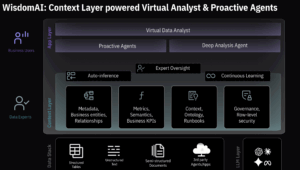

WisdomAI, one of the startups looking to drive semantic consistency into natural language query (NLQ), today launched a series of AI agents that will function as junior analysts to detect anomalies, prepare analyses, and execute decisions.

Four former Rubrik engineers–Soham Mazumdar, Sharvanath Pathak, Kapil Chhabra, and Guilherme Menezes–joined together to co-found WisdomAI in 2023 with the goal of addressing the practical challenges of using large language models (LLMs) to power analytics. It came out of stealth in May 2025 with a $23 million funding round led by Coatue and a vision to build the next generation of AI-powered analytics tools.

Despite all of the investments in AI-powered BI since the ChatGPT revolution started nearly three years ago, we’re still largely in the stage of smart people using dumb tools to try to get value from data, Mazumdar explained in a recent interview.

“The modern data stack gets enormous amounts of investments, crazy valuations, yet if you look at the stack that lives above it, it’s largely remained. It’s much more boring. Not a whole lot has been happening there,” Mazumdar told BigDATAwire. “The overall penetration in terms of enterprises who use [these BI tools] is 100%. But in terms of people who use them within these organizations, it’s far below what it could be.”

Nearly all of the value that BI tools like Tableau and PowerBI generate comes from the hands of “very smart humans,” Mazumdar said. Without smart data analysts, data scientists, and data engineers using their intellectual effort to squeeze insight from “dumb tools,” we would have far fewer insights into the data than we currently have.

WisdomAI CEO and co-founder Soham Mazumdar

The Holy Grail today for AI-powered BI is to make NLQ work and finally democratize access to data insight. The problem is that there’s a gap between the intelligent people who use the tools and unintelligent AI tools, Mazumdar said. That creates a bottleneck, because there are only so many smart humans available to power the dumb tools.

Instead of trying to scale up intelligent humans to work more dumb tools, Mazumdar wants to imbue more intelligence into the tools themselves, so that less technical people can fetch their own analytics insights. While NLQ has improved in recent months, thanks to better language models, there are still challenges when it comes to trusting their output.

WisdomAI hopes to address this trust gap, and thus abolish the human bottleneck, by training a small language model directly on an organization’s data. This small model, which could fit on a laptop, would sit in front of the more capable LLM that resides in the cloud. The small model’s goal is to learn and understand the idiosyncrasies of the organization, including the context, the metrics, and ultimately the “tribal knowledge” that exists in each organization, Mazumdar said.

Mazumdar applauds the work being done on semantic layers to bridge the gap between data storage and human understanding. But he insists that a semantic layer sitting in between a database and a BI tool isn’t enough to overcome the challenges to making NLQ work on a more widespread basis.

“Looker is a great semantic layer,” he said. “But I can promise you that Looker’s semantic layer is simply not ready for AI. And it is not ready for AI because the Looker semantic layer exists for the human analyst to be able to manage the data well.”

What’s needed to overcome the trust gap and achieve semantic success, he said, is developing a full-blown BI tool that has the semantic layer baked in. In WisdomAI’s case, the semantic layer (or context layer, as the company calls it) is integrated with the small language model. As the context layer and its small language model are used and exposed to new business terms, they learn to identify how the business talks about its data.

The WisdomAI model functions as a virtual data analyst that uses the built-in context layer to help users answer questions about their data, Mazumdar said.

“The key thing about the context layer is it’s continuously learning,” he said. “You can bootstrap, but it’s learning from usage. It is learning from feedback. It’s there to power the UX at the end of the day.”

In addition to a small AI model serving as a semantic layer, WisdomAI brings AI guardrails and governance to lower the odds of a model misbehaving. It also features a consumer-grade user interface that can be used effectively by business managers and executives, not just the data analysts, scientists, and engineers who are accustomed to working with these tools.

Mazumdar differentiates between what he dubs “formal semantic layers,” such as Looker’s or AtScale’s semantic layers, and a context layer like the one he is building at WisdomAI. Formal semantic layers excel at defining relationships, metrics, and lineage, whereas context layers such as WisdomAI’s are designed to work with informal tribal knowledge, he said. “There are just things that simply does not fit a semantic model,” he said.

The knowledge that human analysts bring to the table can’t always be quantified or recorded in a formal semantic model, Mazumdar said. For instance, if an organization moved from using V1 of a calculation to V2, that exists in an analyst’s brain, he said. That informal knowledge-keeping system is more conducive to the new generation of language-based tools, but it doesn’t work so well in the more regimented, top-down systems that formal semantic models came from, he said.

“That’s the beauty of it. That is sort of like the whole reason why we have kept it natural language,” he said. “Imagine there is a new analyst who joins your team. You say, ‘Hey, read some documentation here. Let me spend an hour with you. Let me explain some of these nuances. Let me give you some old reports, so you can go and reverse engineer it. Let me give you some small, simple tasks. And let me review your work so that I can give you feedback.’

“I think that’s the way you have to treat this context model, that it starts off as a junior analyst,” Mazumdar said. “You feed it whatever formal semantic model that you have. You give it any documentation that you might have. You say, hey, start answering some questions. Then as you give me answers, I’m going to give you feedback so all of it combined together–formal, informal feedback, and then the AI analyzing all of this behind the scenes to come up with improving the context model.”

While WisdomAI can go across the network to external LLMs to answer queries, it can also work in a firewalled environment. Once its model is trained, it can answer 80% to 90% of the queries, since many of the queries are repetitions, he said. “We have a bunch of mechanisms in place to not hit the language models all the time,” he said.
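WisdomAI did not detail those mechanisms. One common way to avoid calling a language model for repeated questions is a simple answer cache, sketched below in Python. Everything here is a hypothetical illustration and not WisdomAI’s implementation: the `normalize` helper, the `CachedAnswerer` class, and the `fallback` callable standing in for an external LLM are all assumed names.

```python
import re
from typing import Callable, Dict


def normalize(question: str) -> str:
    """Lowercase and drop punctuation so near-identical questions share one key."""
    return " ".join(re.findall(r"[a-z0-9]+", question.lower()))


class CachedAnswerer:
    """Hypothetical wrapper: answer repeats locally, call the model only on a miss."""

    def __init__(self, fallback: Callable[[str], str]) -> None:
        self._fallback = fallback          # stand-in for an external LLM call
        self._cache: Dict[str, str] = {}   # normalized question -> stored answer
        self.hits = 0
        self.misses = 0

    def ask(self, question: str) -> str:
        key = normalize(question)
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        answer = self._fallback(question)
        self._cache[key] = answer
        return answer


def fake_llm(question: str) -> str:
    """Placeholder model used only for this example."""
    return f"answer to: {question}"


if __name__ == "__main__":
    analyst = CachedAnswerer(fake_llm)
    analyst.ask("What was Q3 revenue?")
    analyst.ask("what was  q3 revenue")  # same normalized key, served from cache
    print(analyst.hits, analyst.misses)
```

An exact-match cache like this one only catches questions that differ in case, spacing, or punctuation. A production system would likely need a looser notion of similarity, plus invalidation when the underlying data changes.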

WisdomAI has started to gain traction with some big names. Procurement professionals with Cisco are using the tool to help understand vendor contracts. Another is ConocoPhillips, whose analysts needed to understand telemetry diagnostics manuals.

The goal with the new Proactive Agents launched today is to take WisdomAI’s vision of contextual AI to the next level. The company says they’ll be able to learn from existing analyses to monitor and detect anomalies and patterns in data that would otherwise require a highly skilled analyst to find. The agents will also be able to perform other analyst tasks, including generating dashboards and graphs from the data, explaining underlying drivers of observations in natural language, and recommending next steps to take.

By giving everyone their own personal team of virtual data analysts, organizations will be able to scale capacity without increasing headcount, said Victor Garate, director of BI at Homestory, a WisdomAI customer.

“Before WisdomAI, our biggest bottleneck was human capital–limited by how many analysts we had and how quickly they could work,” Garate stated in a press release. “With Proactive Agents, those limits disappear. Analysis and insights scale automatically, giving our team leverage we simply couldn’t achieve before.”

